Who would have thought that the laws of robotics described by famous science fiction author Isaac Asimov would one day resonate with real-life issues on robots? Last week’s summit on artificial intelligence sought to imagine a world increasingly manned by machines and robots, even self-taught ones, and explore the legal, ethical, economic, and social consequences of this new world. And some panellists underlined a need to establish frameworks to manage this new species.

The AI (Artificial Intelligence) for Good Global Summit took place from 7-9 June.

It does not take a big stretch of imagination to realise that life with robots will change quite a few things, and the global summit looked at the privacy, security, ethics and societal challenges that AI will bring to future society during a panel held on 8 June.

A Tool with a Mind of Its Own

For Stephen Cave, moderating the session, AI is potentially a master key, a kind of universal tool, which is soon to impact all areas of society. Cave is executive director of the Leverhulme Centre for the Future of Intelligence in the United Kingdom.

AI can potentially make new tools, and is autonomous, he said. “We want intelligent machines, which can make decisions for us,” he added. AI can become a tool with a mind of its own.

Joe Konstan, professor at the University of Minnesota, said learning from data can perpetuate discrimination, and there is a need to focus on accessibility and empowerment rather than on speed. He also underlined the importance of AI systems being understandable.

A vast number of jobs could be replaced by machines in areas such as driving, cleaning, and some types of personal care, he said. What happens to people driven out of a job, with no income, he asked, adding that a potential solution would be a collective share of the profit of AI, or compensation for data provided to the system.

This alternative economic system was presented by computer scientist Jaron Lanier, speaking at the World Intellectual Property Organization last year (IPW, WIPO, 21 April 2016).

EU Parliament Calls For Legal, Ethical Framework

Mady Delvaux-Stehres, Member of the European Parliament from Luxembourg, mentioned the January 2017 motion for a European Parliament resolution on legal and ethical implications of robotics.

The motion comes with a report, authored by Delvaux-Stehres, which provides a set of recommendations to the European Commission on Civil Law Rules on Robotics.

She said protocols need to be developed for testing robots before they are admitted to the market. The interaction between robots and humans will produce large amounts of data, but determining to whom the data belong and who can access it is a challenge, she said. Data is a new currency, she added.

There is also a question of liability: who will be liable for the actions of a robot, she asked, adding that a human should remain in the loop. The sharing of responsibilities between all the stakeholders along the line, such as manufacturers, users, data providers, network operators, and robots’ owners, is very complicated, she said. That issue will only get worse with self-taught robots, she said.

Who will be the winners and the losers of an increasingly AI-manned society, she asked. The classical answer would be to address this question through education, but educational systems are frequently slow and resistant to change, she said. One of the solutions to the labour issue could be a basic income, she said, adding that there is no agreement on this principle, and a debate should be launched on the distribution of wealth.

Human-Robot Cooperation

According to Lynne Parker, professor at the University of Tennessee-Knoxville (US), the number of things that only a human can do is shrinking fast.

She suggested that a way forward is to consider that machines augment human capabilities, and that humans and machines can work together to perform better.

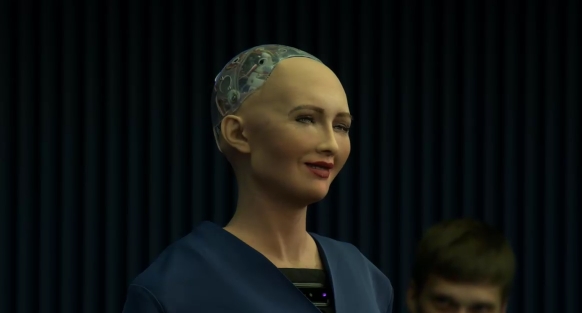

David Hanson, founder and CEO of Hanson Robotics, did not come alone. He was accompanied by Sophia, a robot, which engaged in a dialogue with Hanson, and appeared to be quite happy to be attending the summit.

Sophia, said Hanson, is one of many robots coming onto the marketplace that are made to display emotional intelligence that humans find rewarding and valuable.

The ultimate aspiration of robot designers is to make robots “truly alive,” he said, recognising that this is a bit speculative and controversial. If symbolic reasoning could be integrated into the current AI technology, he said, machines could become really smart “and their own being,” and “we know that sounds terrifying and very, very promising.”

Suggestions for the Future

Discussions on the issues of privacy, security, ethics and societal challenges were undertaken in four breakthrough groups, which convened again in the plenary room to share their proposals.

Some of those proposals were to: continue the conversation, bringing together all stakeholders; assign, identify or convene a world governance body or group to lead or coordinate security and privacy issues, and develop these concepts at the international level.

Also suggested were the creation of model laws concerning security and privacy, and encouragement for countries to adopt those models independently.

On the ethical development of AI, the group proposed to set minimum acceptable degrees of transparency for AI decision-making, and to proactively engage developing countries in shaping AI to address risks and benefits to the poor and vulnerable.

On the future of work, the human-centric aspect of AI was underlined by the group. The AI design should accommodate physical, cognitive, literacy, and language limitations, and be sensitive to different cultural norms and communication styles, the group found.

Also suggested by the group was the encoding of ethical, legal, and human-rights criteria in AI systems, as well as the engagement of ethicists and social scientists.

Image Credits: ITU